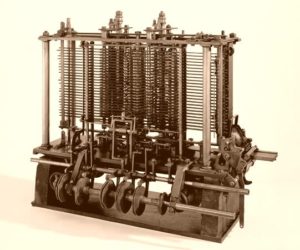

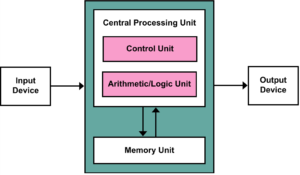

The Von Neumann architecture was fundamental to the design of the first CPUs.

The CPU is the piece of hardware within a computer that carries out the instructions of a computer program by performing the basic arithmetic, logical, and input/output operations of the system.

The CPU uses a Fetch > Decode > Execute cycle to process machine code, which is the low-level program code that has been translated from high-level code so that the computer is able to understand it at a binary level.

- The CPU Fetches an instruction from Memory

- The CPU Decodes or interprets the instruction

- The CPU Executes the instruction
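The three steps above can be sketched as a toy simulator. This is only an illustration: the instruction set (`LOAD`, `ADD`, `HALT`), the single `accumulator` register and the `run` function are all invented for this example and do not correspond to any real CPU.

```python
# Toy machine: each instruction is an (opcode, operand) pair held in memory.
# The opcodes LOAD, ADD and HALT are hypothetical, made up for illustration.
def run(memory):
    accumulator = 0       # single working register
    program_counter = 0   # address of the next instruction to fetch
    while True:
        # Fetch: read the instruction the program counter points to.
        instruction = memory[program_counter]
        program_counter += 1
        # Decode: split the instruction into its opcode and operand.
        opcode, operand = instruction
        # Execute: carry out the operation.
        if opcode == "LOAD":
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "HALT":
            return accumulator
        else:
            raise ValueError(f"unknown opcode: {opcode}")


program = [("LOAD", 2), ("ADD", 3), ("HALT", 0)]
print(run(program))  # 5
```

A real CPU does the same loop in hardware, with binary-encoded instructions instead of Python tuples.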

The speed at which the CPU performs this process is determined by the clock speed. As the name suggests, it acts like a ticking clock: the faster the clock ticks, the faster the instructions are fetched, decoded and executed. Clock speed is measured in MHz or GHz, and a typical current CPU runs at around 3400 MHz, or 3.4 GHz.

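The Intel Core i7 6950X is a 3.0 GHz CPU, which means its clock ticks 3 × 10⁹ times per second, i.e. 3 billion clock cycles per second. As a simplification, if one instruction completed on every cycle, that would be about 3 billion instructions processed per second on each core.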
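The relationship between clock speed and cycles per second is simple arithmetic, and a short sketch makes the units explicit. The function names here are made up for illustration.

```python
def mhz_to_ghz(clock_mhz):
    # 1 GHz is 1000 MHz.
    return clock_mhz / 1000


def cycles_per_second(clock_ghz):
    # 1 GHz is 10**9 clock cycles per second.
    return clock_ghz * 10**9


def cycle_time_ns(clock_ghz):
    # Duration of a single clock cycle, in nanoseconds.
    return 1 / clock_ghz


print(mhz_to_ghz(3400))        # 3.4
print(cycles_per_second(3.0))  # 3000000000.0
print(cycle_time_ns(3.0))      # roughly a third of a nanosecond
```

So at 3.0 GHz each tick of the clock lasts about a third of a nanosecond.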

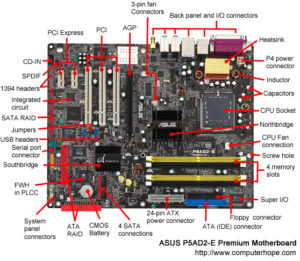

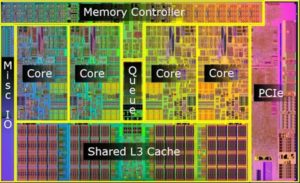

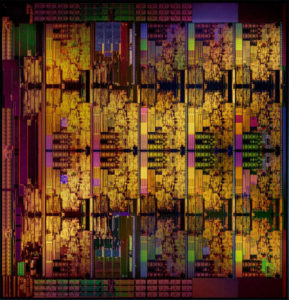

That same i7 6950X also has 10 cores. A core is the section of the chip that does the processing of instructions, so a multi-core CPU is essentially multiple CPUs on one chip. To fit them all onto a chip of this size, the architecture has had to be shrunk down massively: this chip uses a 14 nanometer manufacturing process. To put that into scale, 1 nanometer is one billionth of a meter.

Having more cores allows the CPU to execute more instructions not only more quickly but simultaneously, speeding up compute time massively.
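A rough, idealised model shows why more cores help. It assumes one instruction per clock cycle and work that splits perfectly evenly across cores; real programs rarely achieve this, so treat the numbers as an upper bound rather than a measurement. The function name is hypothetical.

```python
def ideal_run_time_seconds(instructions, clock_ghz, cores=1):
    # Assumption: each core completes one instruction per clock cycle.
    per_core_rate = clock_ghz * 10**9
    # Assumption: the work divides perfectly evenly across all cores.
    return instructions / (per_core_rate * cores)


work = 30 * 10**9  # 30 billion instructions, an arbitrary example workload
print(ideal_run_time_seconds(work, 3.0, cores=1))   # 10.0
print(ideal_run_time_seconds(work, 3.0, cores=10))  # 1.0
```

Under these assumptions, ten cores finish the same workload in a tenth of the time.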

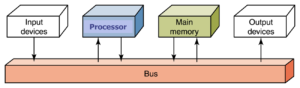

The fastest memory available in a computer is the registers and ‘level 1 cache’ memory built into each CPU core, but even in modern processors the amount of data that can be stored here is very limited. Elsewhere on the CPU chip, and connected to the rest of the computer via the bus, is the level 2 cache: larger than the level 1 cache and somewhat slower, but still much faster to access than the main memory.

The registers and cache memory give the CPU a temporary storage area where it can park data that needs to be processed. They sit as close to the CPU cores as possible, which is what allows them to be accessed so quickly.
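The idea of a small, fast storage area in front of a larger, slower one can be sketched in software. The `TinyCache` class below is a hypothetical illustration, and its "evict the least recently used entry" policy is an assumption chosen for simplicity; real CPU caches are built in hardware and organised differently.

```python
from collections import OrderedDict


class TinyCache:
    """A very small cache sitting in front of a slower main memory."""

    def __init__(self, capacity):
        self.capacity = capacity    # how many values the cache can hold
        self.lines = OrderedDict()  # address -> value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address, main_memory):
        if address in self.lines:
            # Hit: the value is already close to the CPU.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Miss: fetch from the slower main memory and keep a copy.
        self.misses += 1
        value = main_memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            # Cache is full: drop the least recently used entry.
            self.lines.popitem(last=False)
        return value


main_memory = {address: address * 10 for address in range(8)}
cache = TinyCache(capacity=2)
for address in [0, 1, 0, 1, 5, 0]:
    cache.read(address, main_memory)
print(cache.hits, cache.misses)  # 2 4
```

Repeated reads of the same addresses are served from the cache, while the limited capacity means older data has to be pushed out to make room.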